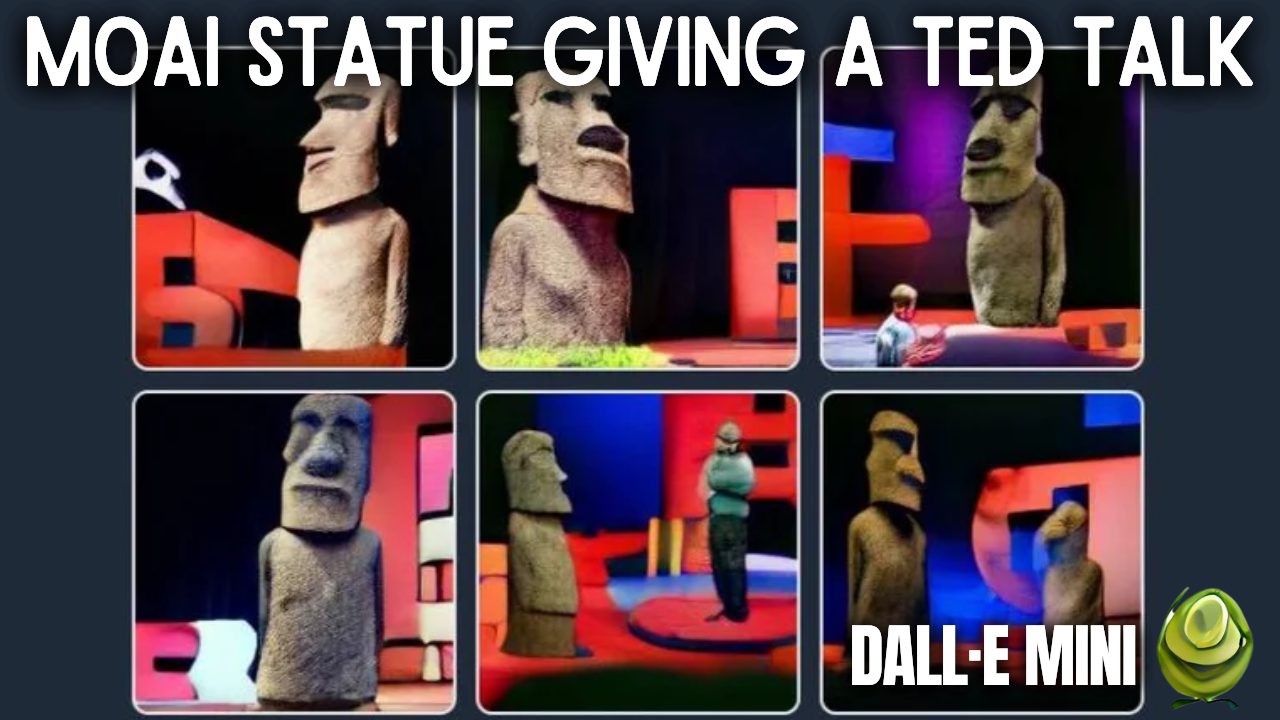

DALL·E mini is amazing, and YOU can use it!

I’m sure you’ve seen pictures like those in your Twitter feed in the past few days. If you wondered what they were, they are images generated by an AI called DALL·E mini. If you’ve never seen those, you need to watch this video because you are missing out. If you wonder how this is possible, well, you are on the perfect video and will know the answer in less than five minutes.

DALL·E mini is a free, open-source AI that produces amazing images from text inputs. Here’s how it works:

Watch the video

References:

►Read the full article: https://www.louisbouchard.ai/dalle-mini/

►DALL·E mini vs. DALL·E 2: https://youtu.be/0Eu9SDd-95E

►Weirdest/Funniest DALL·E mini results: https://youtu.be/9LHkNt2cH_w

►Play with DALL·E mini: https://huggingface.co/spaces/dalle-mini/dalle-mini

►DALL·E mini Code: https://github.com/borisdayma/dalle-mini

►Boris Dayma’s Twitter: https://twitter.com/borisdayma

►Great and complete technical report by Boris Dayma et al.: https://wandb.ai/dalle-mini/dalle-mini/reports/DALL-E-Mini-Explained-with-Demo--Vmlldzo4NjIxODA#the-clip-neural-network-model

►Great thread about DALL·E mini by Tanishq Mathew Abraham: https://twitter.com/iScienceLuvr/status/1536294746041114624

►VQGAN explained: https://youtu.be/JfUTd8fjtX8

►My Newsletter (A new AI application explained weekly to your emails!): https://www.louisbouchard.ai/newsletter/

Video Transcript

0:00

I’m sure you’ve seen pictures like those in your Twitter feed in the past few days. If you wonder what they were, they are images generated by an AI called DALL·E mini. If you’ve never seen those, you need to watch this video because you are missing out. If you wonder how this is possible, well, you’re on the perfect video and will know the answer in less than 5 minutes.

0:18

This name, DALL·E, must already ring a bell, as I covered two versions of this model made by OpenAI in the past year, with incredible results. But this one is different. DALL·E mini is an open-source, community-created project inspired by the first version of DALL·E, and it has kept on evolving since then, with now incredible results, thanks to Boris Dayma and all contributors. Yes, this means you can play with it right away thanks to Hugging Face. The link is in the description below, but give this video a few more seconds before playing with it. It will be worth it, and you’ll know much more about this AI than everyone around you.

0:55

At the core, DALL·E mini is very similar to DALL·E, so my initial video on the model is a great introduction to this one. It has two main components, as you suspect: a language module and an image module. First, it has to understand the text prompt, and then generate images following it: two very different things requiring two very different models. The main differences with DALL·E lie in the models’ architecture and training data, but the end-to-end process is pretty much the same.

1:24

Here, we have a language model called BART. BART is a model trained to transform text input into a language understandable for the next model. During training, we feed pairs of images with captions to DALL·E mini. BART takes the text caption and transforms it into discrete tokens, which will be readable by the next model, and we adjust it based on the difference between the generated image and the image sent as input.

1:48

But then, what is this thing here that generates the image? We call this a decoder. It will take the new caption representation produced by BART, which we call an encoding, and will decode it into an image. In this case, the image decoder is VQGAN, a model I already covered on the channel, so I definitely invite you to watch the video if you’re interested. In short, VQGAN is a great architecture to do the opposite: it learns how to go from such an encoding mapping and generate an image out of it.

2:22

As you suspect, GPT-3 and other language generative models do a very similar thing, encoding text and decoding the newly generated mapping into a new text that it sends you back.

2:35

Here, it’s the same thing but with pixels forming an image instead of letters forming a sentence. It learns through millions of encoding-image pairs from the internet, so basically your published images with captions, and ends up being pretty accurate in reconstructing the initial image. Then, you can feed it new encodings that look like the ones in training but are a bit different, and it will generate a completely new but similar image. Similarly, we usually add just a little noise to these encodings to generate a new image representing the same text prompt.

3:08

And voilà! This is how DALL·E mini learns to generate images from your text captions. As I mentioned, it’s open source, and you can even play with it right away thanks to Hugging Face.
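To make the flow described in the video a bit more concrete, here is a toy Python sketch of the same idea: a prompt becomes an encoding, the encoding is snapped to discrete tokens, and a decoder turns those tokens into pixels. This is not the DALL·E mini code. The four-patch codebook, the hash-like `encode_prompt`, and every function name below are assumptions made up for illustration, standing in for the learned BART and VQGAN models. The sketch also shows the noise step: a little random noise on the encoding can change a few tokens and therefore the image.

```python
import random
from typing import List

# Hypothetical 4-entry codebook: each discrete token stands for one 2x2
# grayscale patch. A real VQGAN codebook is learned and far larger.
CODEBOOK: List[List[List[int]]] = [
    [[0, 0], [0, 0]],          # token 0: dark patch
    [[255, 255], [255, 255]],  # token 1: bright patch
    [[0, 255], [0, 255]],      # token 2: vertical edge
    [[0, 0], [255, 255]],      # token 3: horizontal edge
]


def encode_prompt(prompt: str, n_tokens: int = 4) -> List[float]:
    """Stand-in for the language model: map a prompt to a numeric encoding.

    Purely illustrative: it sums character codes per word and learns nothing.
    """
    words = prompt.lower().split()
    if not words:
        raise ValueError("prompt must contain at least one word")
    return [
        float(sum(ord(ch) for ch in words[i % len(words)]) % len(CODEBOOK))
        for i in range(n_tokens)
    ]


def add_noise(encoding: List[float], sigma: float, rng: random.Random) -> List[float]:
    """Perturb every value of the encoding with Gaussian noise."""
    return [value + rng.gauss(0.0, sigma) for value in encoding]


def quantize(encoding: List[float]) -> List[int]:
    """Snap each value to the nearest valid token index."""
    top = len(CODEBOOK) - 1
    return [min(top, max(0, round(value))) for value in encoding]


def decode_tokens(tokens: List[int], grid: int = 2) -> List[List[int]]:
    """Stand-in for the image decoder: tile each token's patch into one image."""
    if len(tokens) != grid * grid:
        raise ValueError("expected grid * grid tokens")
    image = []
    for grid_row in range(grid):
        for patch_row in range(2):
            row: List[int] = []
            for grid_col in range(grid):
                token = tokens[grid_row * grid + grid_col]
                row.extend(CODEBOOK[token][patch_row])
            image.append(row)
    return image


def generate(prompt: str, sigma: float = 0.0, seed: int = 0) -> List[List[int]]:
    """Full toy pipeline: prompt -> encoding -> (noise) -> tokens -> image."""
    rng = random.Random(seed)
    encoding = add_noise(encode_prompt(prompt), sigma, rng)
    return decode_tokens(quantize(encoding))


if __name__ == "__main__":
    for row in generate("an avocado armchair", sigma=0.8, seed=1):
        print(row)
```

With `sigma=0.0`, the same prompt always gives the same 4x4 image. With a larger `sigma`, different seeds can flip some tokens and produce a different image for the same prompt, which is the role the noise plays in the explanation above.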

3:22

Of course, this was just a simple overview, and I omitted some important steps for clarity. If you’d like more details about the model, I linked great resources in the description below. I also recently published two short videos showcasing some funny results, as well as a comparison of results with DALL·E 2 for the same text prompts. It’s pretty cool to see.

3:42

I hope you’ve enjoyed this video, and if so, please take a few seconds to let me know in the comments and leave a like. I will see you not next week, but in two weeks, with another amazing paper!
